KUALA LUMPUR, June 4 — It finally happened: while wearing my contact lenses, I could not read the text on my phone at all.

Once your eyes start to age, you become that person who holds their phone at various distances or, in my case, pushes their eyeglasses up onto their head just to read the latest spam SMS asking "Do you need a part time job?" You can't do that with contact lenses, so my solution has been to turn on the Zoom feature in iOS. You can find it under Settings > Accessibility > Zoom.

In practice, it lets me double-tap the screen with three fingers to zoom in, then drag three fingers across the screen to move around.

Zoom has plenty of other options that are particularly useful to people with low vision.

You can, for instance, set a filter to invert the colours, switch to greyscale or even use a Low Light option.

Apple has made accessibility features a key part of its product offerings, even highlighting them during product launches.

Most recently, the company previewed new accessibility features for the upcoming iOS 16, and here is what I think of them so far.

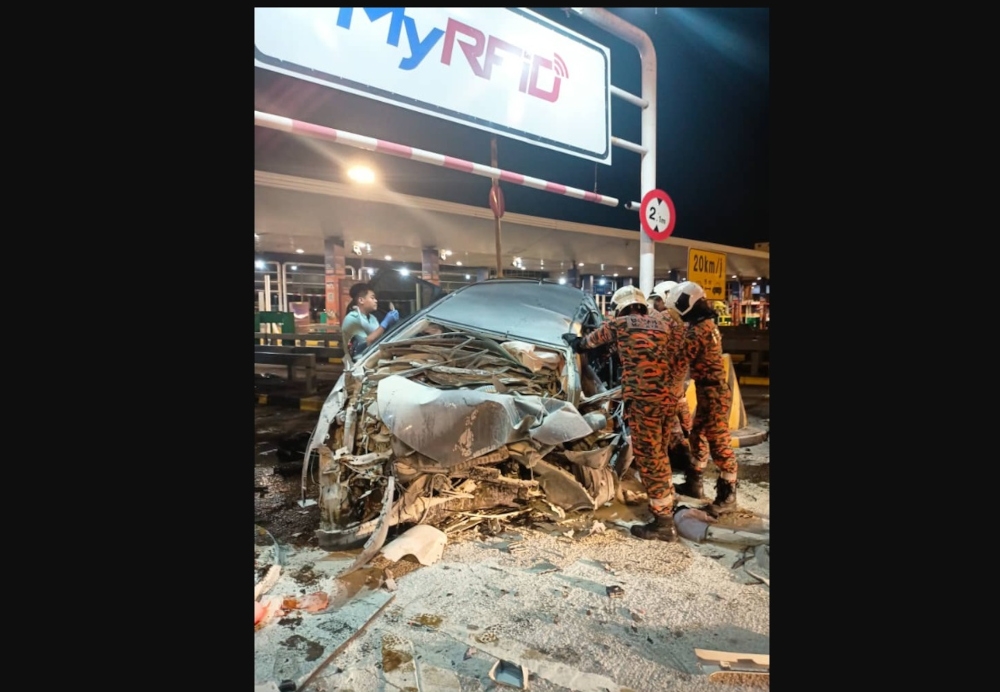

Hello, doors

Door Detection, one of the newly announced features, is intended to help people with low vision when they're out and about.

It tells users where a door is and helps them work out what kind of door it is and how to get through it: whether it is already open, or whether they need to push it, turn a knob or pull a handle.

The feature will also read signs and symbols around the door, so you know you're at your Uncle Benny's apartment and not at his neighbour's, the one with the very angry chihuahua.

It relies on the LiDAR scanner found on select iPhone and iPad models and will be part of a new Detection Mode in Apple's Magnifier app.

Magnifier will also support People Detection, among other things, to help those with low vision better navigate their surroundings.

I have occasionally used the Magnifier app as another way to zoom in on things, and I tested it in a mock scenario of being visually impaired (which, at this rate, is in my future).

I unlocked my phone, said "Hey Siri, open the Magnifier app" and pointed the camera at my chosen subject. Once iOS 16 comes out, I plan to see how well Door Detection works with Malaysian doors and how useful it is out and about.

It's very simple to use and, with Siri now a lot more responsive and better at understanding spoken commands, I think people with low vision would appreciate it.

However, because it relies on the LiDAR scanner, which is only available on higher-end iPhones and iPads, the feature is out of reach for people in lower-income brackets.

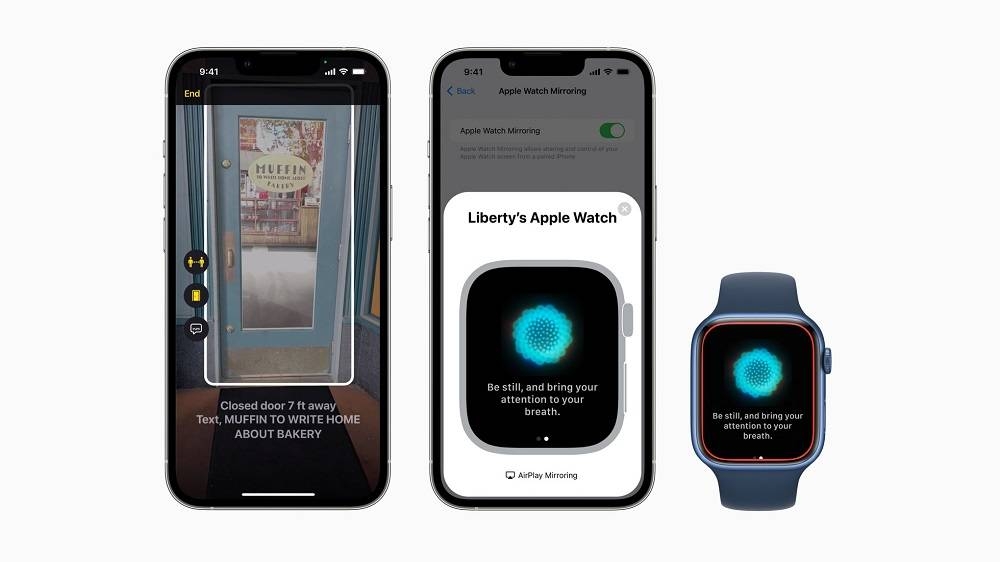

Mirror, Mirror on my Watch

Another upcoming feature is Apple Watch Mirroring, which will let Apple Watch wearers with disabilities control their watch from their iPhone using Voice Control and Switch Control.

Why is this a big deal? If you have motor difficulties, the supposedly simple act of tapping your watch screen with your other hand isn't so simple.

The iPhone's larger screen is also easier to navigate for those who use eye tracking or external Made for iPhone switches.

For those who prefer to control the Apple Watch with hand gestures (which it already supports), new actions have been added. For instance, a double-pinch gesture can answer or end a call, play or pause media, take photos and more.

Having tried the Watch's existing gesture support, I can see why it makes sense for those who find it difficult to reach over and tap the screen. It also saves a fair amount of physical effort.

As someone with carpal tunnel syndrome who is also bad at remembering shortcuts, I found it took quite a bit of practice to get used to, but then it was not designed for me or my specific needs.

Therein lies the challenge: some accessibility features will naturally be far more useful to certain groups than to others.

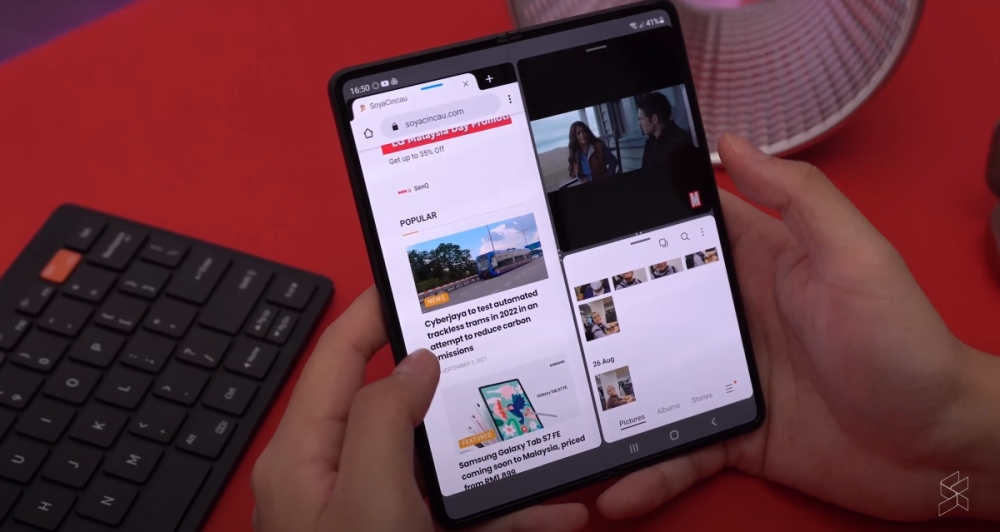

Captions coming to you live

Live Captions, meanwhile, is a feature that will appeal not just to people with hearing impairments but also to content producers and consumers.

Imagine being on a FaceTime call and being able to read what the other person said, so you don't miss anything if you have to turn away for a second to make sure your toddler is carrying a toy snake and not a real one. That is useful whether or not you're hard of hearing.

On the Mac, you can even type a response and have it spoken aloud in real time. This helps people with hearing disabilities because, even though many can speak, they often find it hard to do so while conversing, especially in a group.

Live Captions will also stay private and secure because they are generated on the device itself rather than on a remote server, so your conversations are not sent off elsewhere to be transcribed.

More features are on the way, and I hope to spend more time exploring what's available once we get more details about iOS 16, hopefully at Apple's Worldwide Developers Conference (WWDC), which takes place in a couple of days.