SINGAPORE, July 26 — More people here encountered harmful online content in 2024 than last year, but most of them ignored it, a government survey showed.

The Ministry of Digital Development and Information (MDDI) — formerly the Ministry of Communications and Information — published the findings yesterday.

The annual Online Safety Poll was conducted in April with 2,098 Singapore respondents aged 15 and above.

Overall, about three-quarters (74 per cent) of the respondents reported encountering harmful online content such as cyberbullying and violence this year, up from 65 per cent in 2023.

Two-thirds of the respondents said that they encountered such content on six major social media platforms, namely Facebook, HardwareZone, Instagram, TikTok, X (formerly Twitter) and YouTube. This was up from the 57 per cent logged in 2023.

Last July, the Infocomm Media Development Authority (IMDA) designated these platforms under Singapore’s Code of Practice for Online Safety because of their significant reach or impact.

Under the code, these platforms must put in place measures to minimise users’ exposure to harmful content, including sexual and violent content, content relating to suicide and self-harm, and cyberbullying.

Notably, six in 10 respondents (61 per cent) took no action when encountering such content, the poll found.

About a third (35 per cent) of the respondents blocked the offending account or user, while only about a quarter (27 per cent) reported the content to the platform.

Those who chose to ignore harmful content did so for several reasons, with the most common being:

• Not seeing the need to take action

• Being unconcerned about the issue

• Believing that making a report would not make a difference

The poll also found that:

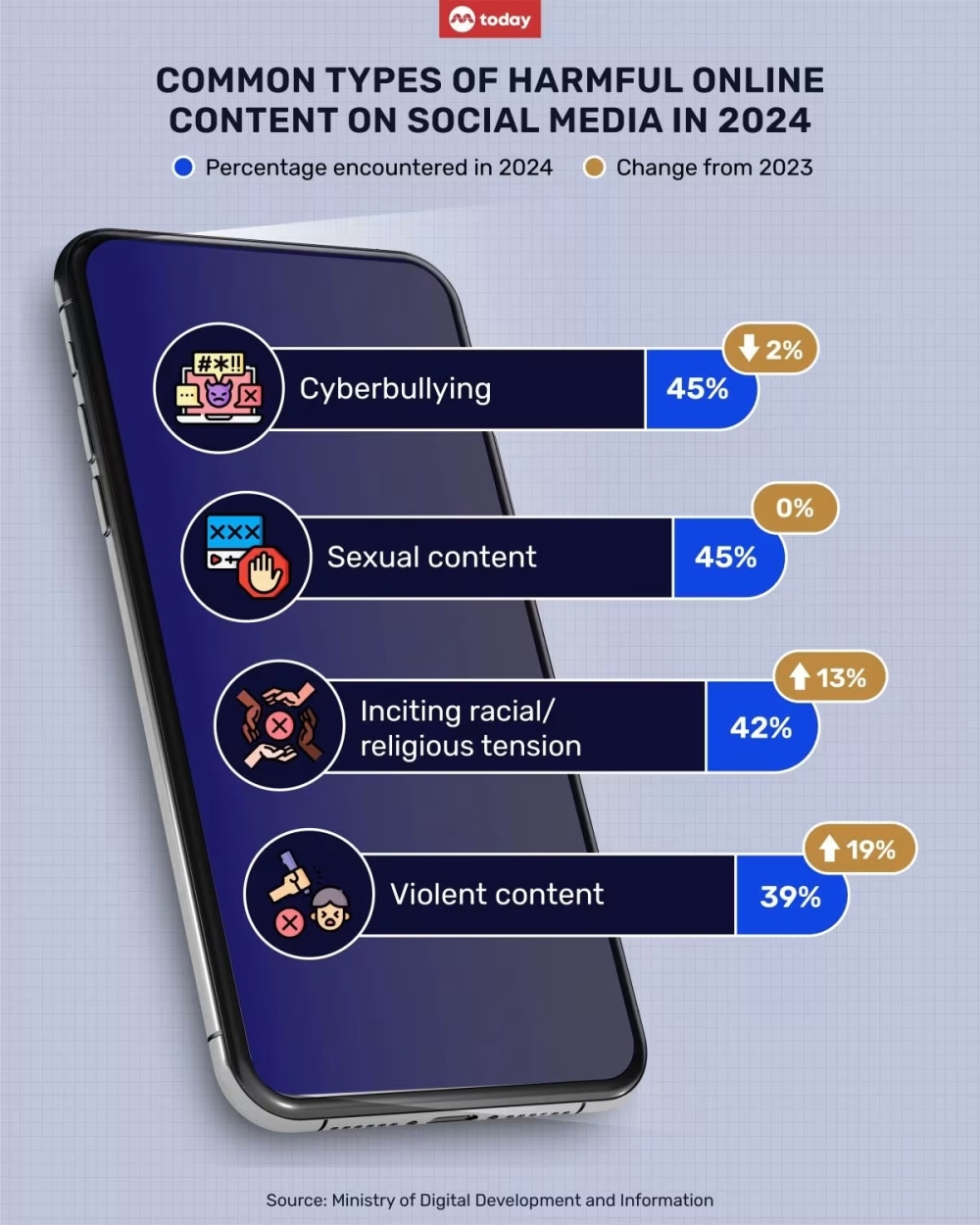

• Encounters with content that incited racial or religious tension rose from 29 per cent in 2023 to 42 per cent in 2024

• Encounters with violent content increased from 20 per cent in 2023 to 39 per cent in 2024

• Cyberbullying and sexual content remained the most prevalent; each category was encountered by 45 per cent of the respondents

Encounters with sexual content remained the same as in 2023, while cyberbullying dipped slightly from last year’s 47 per cent, MDDI said.

Among the six platforms, Facebook was the most mentioned, with 57 per cent of the respondents encountering harmful content there. Instagram was close behind at 45 per cent and TikTok came in third at 40 per cent.

In fourth place was YouTube, with 37 per cent of respondents saying that they encountered harmful content there. X and HardwareZone ranked fifth and sixth, at 16 per cent and 6 per cent respectively.

Encounters with harmful content on platforms other than the six designated under the code, such as messaging applications, search engines, news sites and gaming platforms, held steady at 28 per cent, the same as last year.

Why it matters

MDDI said that the study aimed to understand how Singapore users experience harmful online content and what actions they take to address it.

The ministry added that the prevalence of harmful content on Facebook and Instagram may be partly explained by their larger user bases compared with other platforms, but “also serves as a reminder of the bigger responsibility these platforms bear” to ensure online safety.

Media experts who spoke to TODAY attributed the rise in harmful content encounters to increased online activity and the overall proliferation of content, which social media platforms find hard to moderate.

They also pointed to growing societal acceptance and normalisation of such material.

Anita Low-Lim, chief transformation officer at Touch Community Services, said that such “desensitisation” to harmful content, including sexually explicit material, can “lower inhibitions and encourage the creation and sharing of increasingly harmful materials”.

Carol Soon, principal research fellow at the Institute of Policy Studies and vice-chair of IMDA’s Media Literacy Council, said that “perpetrators now have increasingly easy-to-use photo and video editing apps and generative AI (artificial intelligence) tools to create offensive, exploitative and deceptive content like fake nudes and deepfake pornography”.

Another possible reason for the rise in reported encounters is that people are more aware of online harms and their potential negative impacts.

In the past, when there was less discussion of online harm, those who encountered offensive and disturbing content may have dismissed their own discomfort, rationalising that harmful speech and actions were just part of their online experience, Dr Soon said.

“Greater awareness of online harm among users bodes well as it puts (users) on guard to take precautions for themselves and their loved ones,” she said, adding that this can also encourage vigilant practices, such as parents setting up parental controls on devices to minimise their children’s exposure to harmful content.

On users’ “apathy” towards reporting harmful content, Dr Soon said that this finding was concerning and that public education is required to help them understand how harmful content can hurt both the individual and the community.

“This applies to both victims and bystanders. Continued efforts on the part of platforms to improve their reporting mechanisms and more timely removals of harmful content will help boost user efficacy,” she added.

Improve reporting processes

Among the respondents who flagged harmful content to the six designated social media platforms, about eight in 10 said that they had faced issues with the reporting process, including:

• The platforms not taking down the harmful content or disabling the account responsible

• The platforms not providing an update on the outcome

• The platforms allowing the removed content to be reposted

Experts suggested that social media platforms be more transparent about how they deal with violations of their safety policies, and that they remove harmful content promptly to show their commitment to user safety.

Low-Lim said that companies can consider engaging with the media authorities here to understand cultural norms and acceptable content when setting community standards, because “(what) is deemed normal and accepted in one country may be considered offensive or harmful in another”.

“By tailoring community guidelines to specific regions, online platforms can better identify and address harmful content,” she added.

Natalie Pang, associate professor from the department of communications and new media at the National University of Singapore, said that embedding reporting features within the platform’s interface can help users access them more easily.

As part of the requirements under the Code of Practice for Online Safety here, designated social media services are due to submit their first online safety compliance reports by the end of July.

“The IMDA will evaluate their compliance and assess if any requirements need to be tightened,” MDDI said. — TODAY